Ontario Tech University STEAM-3D Research Lab

“We have used the ideas below in Workshops for Educators and in AI & Science Camps for Students. AI is a fast-changing field with increasing impact on our society. Educating ourselves and our students about AI is of tremendous importance.”

Janette M. Hughes, Canada Research Chair, Ontario Tech U

ABOUT THE AI WORKSHOP

- For students (enrichment; remediation), teachers (professional learning; online teaching resource) & parents (AI awareness)

- Self-serve workshops: pick and choose activities to complete

- A. What is AI?

- B. How do machines think?

- C. Where is AI in our society today?

- D. What are some AI issues?

- E. Meehaneeto – an AI story

AI stands for Artificial Intelligence.

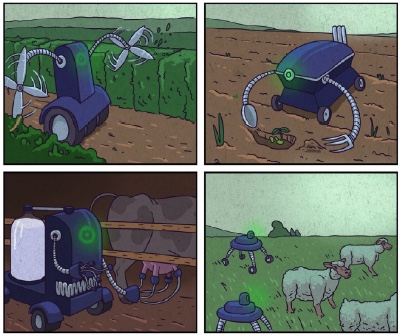

Some AI has a physical form (like an agricultural robot) and some AI only has a digital form (like software, that is, computer code that can make financial investment decisions). In both cases, it is the computer code that creates what appears to us to be “intelligence”.

A.1. PHYSICAL AI

Here are some physical examples of AI:

- robots used in manufacturing

- self-driving cars

- autonomous surveillance/security drones

- personal assistant/companion robots

- agricultural robots

Do an Internet search

- What other examples of physical AI can you find?

- Research a specific example of physical AI (like robots used in agriculture or in manufacturing) to better understand the variety of applications and their impact.

A.2. DIGITAL-ONLY FORMS OF AI

Here are some examples of digital-only AI:

- automated financial investing

- tools for processing natural language

- image recognition

- chat bots

- monitoring of social media interactions

Do an Internet search

- What other examples of digital-only AI can you find?

- Research a specific example of digital-only AI (like chat bots) to better understand the variety of applications and their impact.

A.3. NATURAL VS ARTIFICIAL

Humans, as well as animals, have natural intelligence. They are born with the capacity to think and to learn. They have goals, they have senses and emotions to perceive their environment, and they have the capacity to make choices to help meet their goals. Humans also have a consciousness, or an awareness of self.

Machines can be designed, or programmed, to have some form of artificial intelligence that mimics intelligent human behaviours, and that can also do things that humans cannot do.

A.5. STRONG vs WEAK AI

Strong AI, also known as Artificial General Intelligence (AGI), refers to the ability to learn, understand and act like a human.

Weak AI (also known as Narrow AI) performs pre-programmed tasks or creates expert systems, such as medical monitoring and diagnosis.

Do an Internet search

- What do experts say about AGI?

- What are the predictions about AGI development?

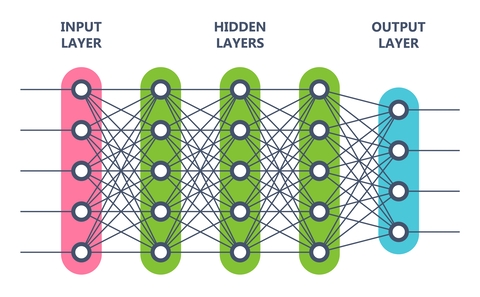

A.6. MACHINE LEARNING, DEEP LEARNING & NEURAL NETWORKS

Machine learning is a sub-field of AI. Machine learning tries to mimic human learning. It uses labelled data sets and learns from feedback to improve its performance and accuracy.
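Learning from labelled data and feedback can be illustrated with a toy example in Python. This is only a sketch under made-up assumptions: the temperatures, the labels, the starting guess of 30 and the step size of 1 are all invented for the illustration, and real machine learning systems use far more data and more sophisticated methods. The program starts with a poor guess about what counts as “cold” and adjusts that guess each time the labelled data shows it was wrong.

```python
# Labelled data: (temperature in degrees Celsius, label).
# The label is True when a person called that day "cold".
labelled_data = [(-10, True), (-2, True), (3, True),
                 (12, False), (20, False), (28, False)]


def predict(temperature, threshold):
    """Guess that a day is cold when it is below the threshold."""
    return temperature < threshold


def train(data, threshold=30.0, step=1.0, rounds=50):
    """Adjust the threshold a little every time a guess is wrong."""
    for _ in range(rounds):
        for temperature, is_cold in data:
            guess = predict(temperature, threshold)
            if guess and not is_cold:
                # Feedback: we said "cold" but the label says otherwise.
                threshold -= step
            elif is_cold and not guess:
                # Feedback: we missed a day that was labelled cold.
                threshold += step
    return threshold


learned_threshold = train(labelled_data)
print("Learned threshold:", learned_threshold)
for temperature, is_cold in labelled_data:
    print(temperature, "cold?", predict(temperature, learned_threshold))
```

Try changing the labelled data and running the code again to see how the learned threshold changes.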

Deep learning is a branch of machine learning. It relies less on humans to label data, and is designed to self-categorize data based on attributes it notices.

Neural networks (which are not “neural” in the human sense) are a branch of deep learning. Neural networks make decisions based on weighted probability theory (Bayesian probability theory). Imagine a web of paths with intersecting points (or nodes). Nodes that perform to an acceptable level become active and send data to the next node. Nodes that do not perform well become inactive.
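The picture of a node that passes data along only when it performs well enough can be sketched in a few lines of Python. This is a simplified illustration, not how any particular AI system is built: the function name `node_output`, the weights and the threshold value are all made up for this example.

```python
def node_output(inputs, weights, threshold):
    """Return the weighted total if the node is active, otherwise 0."""
    # Multiply each input by its weight and add the results together.
    total = sum(x * w for x, w in zip(inputs, weights))
    # The node becomes active only if the total reaches the threshold.
    if total >= threshold:
        return total
    # An inactive node sends nothing useful to the next node.
    return 0.0


# Hypothetical example: two inputs, with the first weighted more heavily.
strong_signal = node_output([1.0, 0.5], [0.8, 0.2], threshold=0.5)
weak_signal = node_output([0.2, 0.1], [0.8, 0.2], threshold=0.5)
print("Strong signal passes on:", strong_signal)
print("Weak signal passes on:", weak_signal)
```

In this sketch the first node is active and the second is inactive. A real network connects many such nodes and adjusts the weights as it learns.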

Do an Internet search

- What are the uses of machine learning?

- Is machine “learning” like human learning?

- What is Bayesian probability theory?

B.1. MAKING DECISIONS

One of the simplest ways to make machines smart is to give them the ability to make decisions.

For example, here are some decisions you make daily, using IF / THEN logic, without having to think very hard:

- IF it’s cold THEN

- Wear mitts and a toque.

- IF it’s raining THEN

- Wear a raincoat OR use an umbrella.
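The everyday decisions above can be written as code. Here is a minimal sketch in Python; the function name `what_to_wear` and its two inputs are invented for this example.

```python
def what_to_wear(is_cold, is_raining):
    """Return a list of things to wear, using IF / THEN logic."""
    items = []
    # IF it's cold THEN wear mitts and a toque.
    if is_cold:
        items.append("mitts")
        items.append("toque")
    # IF it's raining THEN wear a raincoat (an umbrella would also work).
    if is_raining:
        items.append("raincoat")
    return items


print(what_to_wear(is_cold=True, is_raining=False))
print(what_to_wear(is_cold=False, is_raining=True))
print(what_to_wear(is_cold=True, is_raining=True))
```

Notice that each IF is checked separately, so on a day that is both cold and rainy the list includes all three items.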

B.2. Machine logic

Machines use similar logic to make decisions. For example, if you have set your smartphone alarm for 7 am, it will “think” something like this:

- IF the alarm is set AND it’s 7 am THEN

- Activate the alarm.
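The alarm logic above combines two conditions with AND. A rough sketch in Python might look like this; it is not how any real smartphone is programmed, and the function name `should_ring` and its inputs are made up for the example.

```python
def should_ring(alarm_is_set, current_hour, alarm_hour=7):
    """Return True only when the alarm is set AND it is the alarm hour."""
    # Both conditions must be true for the alarm to activate.
    return alarm_is_set and current_hour == alarm_hour


print(should_ring(alarm_is_set=True, current_hour=7))   # True
print(should_ring(alarm_is_set=True, current_hour=6))   # False
print(should_ring(alarm_is_set=False, current_hour=7))  # False
```

If either condition is false, the alarm stays silent.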

B.3. Conditional statements

IF / THEN statements are called conditional statements, and they are used in code to make decisions. [sections B.3-B.4 adapted from Animal Farm (Gadanidis, Hughes & Gadanidis, 2021/2018)]

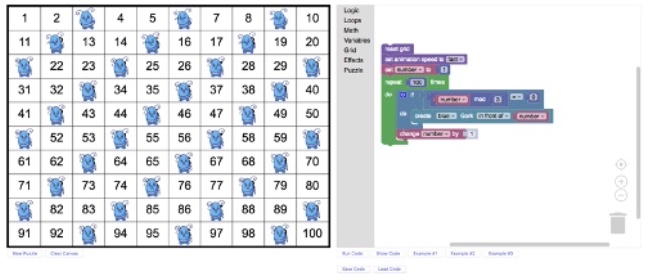

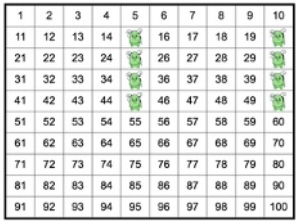

Below, you will use a coding app (available at https://learnx.ca/sets) to highlight number patterns on a grid with conditional statements.

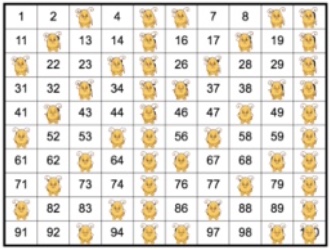

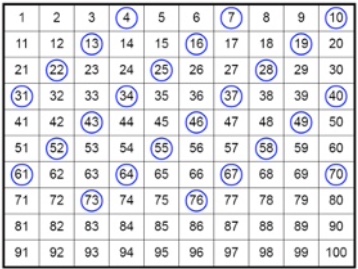

B.4. Sets & Subsets on a grid

Sets of numbers are beautiful, especially on a grid.

B.4.a.

- Go to learnx.ca/sets. Click on Example 1.

- Notice that the conditional statement if-do determines which numbers are decorated.

- Alter values and click Run Code to see the effect. For example, change the Repeat number or the character colour.

- What do you think number mod 3 = 0 means?

- Alter the code to get the pattern below.

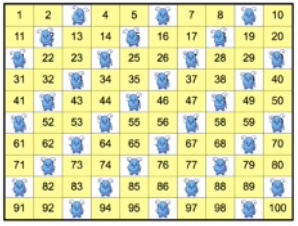
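One way to explore what number mod 3 = 0 means is to try it in a text-based language. The sketch below is written in Python rather than the blocks used at learnx.ca/sets, and it assumes a 10-by-10 grid of the numbers 1 to 100. The grid size and the square-bracket decoration are choices made for this example, not features of the app. In Python, mod is written `%` and gives the remainder after division.

```python
def decorated_grid(divisor, size=10):
    """Build a grid of the numbers 1 to size*size as lines of text.

    Numbers that leave a remainder of 0 when divided by `divisor`
    are decorated with square brackets.
    """
    lines = []
    for row in range(size):
        cells = []
        for col in range(size):
            number = row * size + col + 1
            # The conditional statement: if number mod divisor = 0, decorate.
            if number % divisor == 0:
                cells.append(f"[{number:3d}]")
            else:
                cells.append(f" {number:3d} ")
        lines.append("".join(cells))
    return lines


for line in decorated_grid(3):
    print(line)
```

Try `decorated_grid(4)` or `decorated_grid(5)` and look at how the pattern of decorated numbers changes.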

B.4.b.

- Go to learnx.ca/sets. Click on Example 2.

- Run the code to see the pattern below.

- Notice the conditional statement if-do-else in the code.

- Alter the code to get the pattern below.

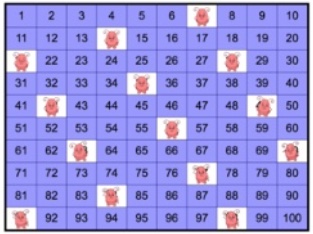
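An if-do-else statement chooses between two actions: one when the condition is true, and another when it is false. Here is a minimal sketch in Python. The even/odd rule and the colour names are made up for this example and may differ from what Example 2 in the app uses.

```python
def colour_for(number):
    """Pick a colour for a number using if / else."""
    # IF the number is even, choose one colour...
    if number % 2 == 0:
        return "blue"
    # ...ELSE choose a different colour.
    else:
        return "yellow"


for number in range(1, 11):
    print(number, colour_for(number))
```

Every number gets a colour, because the else branch catches everything the if condition misses.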

B.4.c.

- Go to learnx.ca/sets. Click on Example 3.

- Run the code to see the pattern below.

- What is the purpose of the conditional code if __ and __ ?

- Alter the code to get the pattern below.

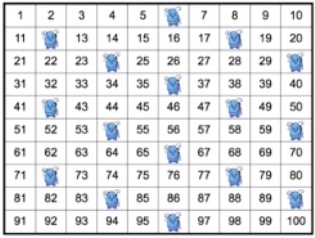
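Joining two conditions with and picks out the numbers that belong to both sets at once. The sketch below, in Python, assumes the two sets are the multiples of 2 and the multiples of 3 among the numbers 1 to 100. These are choices made for this example, and Example 3 in the app may use different conditions.

```python
def in_both_sets(number):
    """Return True when a number is a multiple of 2 AND a multiple of 3."""
    return number % 2 == 0 and number % 3 == 0


# Collect every number from 1 to 100 that passes both tests.
matches = [n for n in range(1, 101) if in_both_sets(n)]
print(matches)
```

What do you notice about the numbers in the list? What single mod test would give the same result?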

B.4.d.

- Go to learnx.ca/sets. This app has built-in puzzles to challenge you.

- Puzzles look like the one below, where the number pattern you have to match is circled.

C.1. PERSONAL ASSISTANTS

Do an Internet search to find the features and capabilities of common personal assistants. A partial list is shown below.

- Apple’s Siri

- Google’s Google Assistant

- Amazon’s Alexa

- Microsoft’s Cortana

What other personal assistants are there?

- Compare and contrast these personal assistants based on their features.

- Which of these would you pick as your favourite, and for what reasons?

- If you could add a new feature to the personal assistant of your choice, what would the feature be?

C.2. AGRICULTURAL ROBOTS (AGBOTS)

There are agbots for a wide variety of agricultural tasks. Below is a partial list.

- Harvesting crops

- Controlling weeds

- Seeding and planting

- Irrigation

- Monitoring livestock

- Sorting and packaging agricultural products

Do an Internet search to find 3 of the most recent agbot advances.

- Describe how the advances improve what agbots can do

- Discuss how the agbot advances may change agriculture

C.3. MILITARY AI

Many countries are developing AI for military use.

General John Murray of the US Army Futures Command told an audience at the US Military Academy last month that swarms of robots will force military planners, policymakers, and society to think about whether a person should make every decision about using lethal force in new autonomous systems. Murray asked: “Is it within a human’s ability to pick out which ones have to be engaged” and then make 100 individual decisions? “Is it even necessary to have a human in the loop?” he added.

Wired (2021)

Read the article The Pentagon Inches Toward Letting AI Control Weapons in Wired (2021).

- How is the military planning to use AI?

- Should AI make its own targeting decisions?

- Do an Internet search to find other military uses of AI.

C.4. WHAT ELSE?

Do an Internet search on uses of AI to find other areas where AI is used.

- What may be some benefits of using AI?

- What may be some drawbacks of using AI?

D.1. BENEFITS & RISKS OF AI?

Max Tegmark is President of the Future of Life Institute & Professor of Physics at MIT.

… the concern about advanced AI isn’t malevolence but competence. A super-intelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we have a problem.

Max Tegmark, Future of Life Institute

Read Max Tegmark’s article at the Future of Life Institute.

- What are the key ideas in this article?

D.2. USING AI FOR GOOD?

Bernard Marr is an internationally best-selling author, popular keynote speaker, futurist, and a strategic business & technology advisor to governments and companies.

Amid the cacophony of concern over artificial intelligence (AI) taking over jobs (and the world) and cheers for what it can do to increase productivity and profits, the potential for AI to do good can be overlooked.

Bernard Marr’s article in Forbes

Read Bernard Marr’s article in Forbes.

See also this video.

- What AI “for good” does Marr identify?

- What are the implications for society?

D.3. DEVELOPING HUMAN-FRIENDLY, SOCIAL AI?

Mark H. Lee is an Emeritus Professor of Computer Science at Aberystwyth University in Wales, and the author of How to Grow a Robot.

Developmental robotics is a theory that he explores in his latest book, How to Grow a Robot. He argues that artificial intelligence, more specifically Deep Learning, is the foundation on which robots will be built in the future.

But he notes that there are limitations to current AI. “Most programs don’t really understand what they’re doing. So you may translate text, you might recognize images, but you don’t really know what those images are about, or what the text really means.”

And, he says, that’s a big problem when it comes to using them in many real life applications that really matter, like driverless cars.

Most AI study the already developed adult human brain. But the brain develops through experience and much of the adult brain has formed through earlier experience. A child learns to control its limbs and sensory systems over time and picks up new skills along the way.

The question is how this sort of learning is generated, says Lee. If we knew that, we wouldn’t need big data. “We would be learning through experience.”

Lee says curiosity might be the answer. He applied this theory in his own work. “We ran our robot through this process, from no abilities at all, to be able to pick up objects that he sees on the table and move them around.”

“Anything that is novel that it has not been seen before is exciting, a stimulus. And he tries to repeat the action that caused that stimulus, if at all possible.”

“I see robots as a way of exploring the degree to which computers can reach out to humans, and I don’t see it as as far reaching as a lot of science fiction would do, and a lot of over-hyped AI work would suggest, but I do think there’s a lot of potential there for a robot to become grounded in the world,” says Lee.

Mark H. Lee in CBC.ca News

Read the article at CBC.ca News.

- How may human-friendly AI develop?

- Can AI be “curious”?

D.4. GOOGLE’S PRIVACY BACKPEDAL?

Greg Bensinger, a member of the NY Times editorial board, writes:

There is a saying in Silicon Valley that when a product is free, the user is the product.

That’s a diplomatic way of describing what amounts to tech companies’ cynicism toward their own customers. Time was, companies worked to meet customer needs, but tech businesses have turned that on its head, making it the customer’s job to improve their products, services, advertising and revenue models.

With little regulatory accountability, this pursuit is a particular fixation for the biggest tech companies, which have the unique ability to pinpoint customers’ every online move. As part of this economy of surveillance there is perhaps nothing more valuable than knowing users’ locations.

So it was that Google executives were dismayed over a most inconvenient discovery: When they made it simpler to halt digital location tracking, far too many customers did so. According to recently unredacted documents in a continuing lawsuit brought by the state of Arizona, Google executives then worked to develop technological workarounds to keep tracking users even after they had opted out. So much for the customer always being right.

Rather than abide by its users’ preferences, Google allegedly tried to make location-tracking settings more difficult to find and pressured smartphone manufacturers and wireless carriers to take similar measures. Even after users turned off location tracking on their devices, Google found ways to continue tracking them, according to a deposition from a company executive.

Greg Bensinger in the NY Times.

Read Greg Bensinger’s article in the NY Times.

- What are the issues raised?

- Is Google an exception or an example?

- How might you protect your online privacy?

D.5. AI AS A THREAT TO THE HUMAN RACE?

Stephen Hawking on BBC, speaking on the topic of “AI could spell end of human race.”

D.6. AI IS A THREAT TO DEMOCRACY?

Amy Webb, futurist and NYU professor, says:

In the US, Google, Microsoft, Amazon, Facebook, IBM, and Apple (the “G-MAFIA”) are hamstrung by the relentless short-term demands of a capitalistic market, making long-term, thoughtful planning for AI impossible. In China, Tencent, Alibaba, and Baidu are consolidating and mining massive amounts of data to fuel the government’s authoritarian ambitions.

If we don’t change this trajectory, argues Webb, we could be headed straight for catastrophe. But there is still time to act, and a role for everyone to play.

Amy Webb (2019) in MIT Technology Review.

Read Amy Webb’s article in MIT Technology Review.

- What AI trends does Webb identify?

- What are the implications for society and for democracy?

- What can be done to protect our democratic processes?

D.7. AI COMPANY INTERESTS AT ODDS WITH OURS?

AI development is integral to the work of Google & Facebook, for example, and increasing in importance.

Read the news reports about Google & Facebook quoted below.

- What are the issues raised?

- Research these issues further, by finding reports from other sources.

- How do these issues impact our society?

According to The Guardian (2019):

Google has made “substantial” contributions to some of the most notorious climate deniers in Washington despite its insistence that it supports political action on the climate crisis.

It donates to such groups, people close to the company say, to try to influence conservative lawmakers, and – most importantly – to help finance the deregulatory agenda the groups espouse.

How AI algorithms work

According to The Guardian (2021):

Researchers have long complained that little is shared publicly regarding how, exactly, Facebook algorithms work, what is being shared privately on the platform, and what information Facebook collects on users. The increased popularity of Groups made it even more difficult to keep track of activity on the platform.

“It is a black box,” said González regarding Facebook policy on Groups. “This is why many of us have been calling for years for greater transparency about their content moderation and enforcement standards.”

Meanwhile, the platform’s algorithmic recommendations sucked users further down the rabbit hole. Little is known about exactly how Facebook algorithms work, but it is clear the platform recommends users join similar groups to ones they are already in based on keywords and shared interests. Facebook’s own researchers found that “64% of all extremist group joins are due to our recommendation tools”, an internal report in 2016 found.

AI ethics; AI research

According to BBC (2020):

An open letter demanding transparency has now been signed by more than 4,500 people, including DeepMind researchers and UK academics.

Google denies Timnit Gebru’s account of events that led to her leaving the company.

She says she was fired for sending an internal email accusing Google of “silencing marginalised voices”.

She sent the email after a research paper she had co-authored was rejected.

[…] Signed by staff at Google, Microsoft, Apple, Facebook, Amazon and Netflix as well as several scientists from London-based AI company DeepMind – owned by Google parent company Alphabet – the open letter urges Google to explain why the paper was rejected.

“If we can’t talk freely about the ethical challenges posed by AI systems, we will never build ethical systems,” DeepMind research scientist Iason Gabriel tweeted.

D.8. OTHER ISSUES WITH AI?

Do an Internet search to find other reports on the above issues, as well as other issues, about AI.

- What are the issues?

- What are opposing views?

- What is your perspective?

- What may be done about them?

- How can you help?

Read the AI story Meehaneeto (Hughes & Gadanidis, 2021).

- What is Meehaneeto?

- What did you learn from the story?

- How did you feel?

- What else do you want to know?

- What might you do differently if you were a character in this story?

- What might happen next in this story?

- What in the story is similar to our world?

- What is different?

- What might you do to help?